| enginuity |


Speech recognition research

Over the last few years, a variety of commercial speech recognition packages have become available which allow users to dictate directly into their computers. Similar technology will soon be deployed in telephone networks where it will be used to provide a growing range of fully automatic services such as email reading, flight information and booking, weather reports, and traffic information - all accessible anywhere from any telephone.

These rapidly increasing applications of speech recognition technology have been made possible by research advances over the last decade, many of which originate from the Engineering Department's Speech, Vision and Robotics Group. Work on speech analysis began in the early 70s under the leadership of Professor Frank Fallside. Dr Steve Young (now Professor Young) started working more specifically on speech recognition at Cambridge in the late 80s. Working with his colleague Phil Woodland, he made a major breakthrough in the early 90s in the methodology of acoustic modelling, which put the Cambridge team's research at the forefront of speech recognition systems worldwide.

Progress in speech recognition over the last decade has been monitored by a series of annual evaluations run by the US National Institute of Standards and Technology and the US Defense Advanced Research Projects Agency. In the early 90s, the best recognisers in the world produced around 15% errors on a 20,000-word speaker-independent dictation task. In 1994, the HTK system developed at the Cambridge University Engineering Department (CUED) recorded just 7% errors on a much harder unrestricted-vocabulary task, beating 14 other competing systems, including ones from IBM, AT&T, Dragon, BBN Systems and various universities.


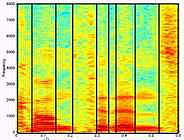

A speech spectrogram

The HTK system effectively demonstrated that desktop dictation was possible, and companies like Dragon and IBM subsequently converted these ideas into commercial reality. The CUED team continued to improve their system, tackling harder tasks such as dictation in noise and, most recently, transcription of broadcast news material. The latter is particularly difficult because the recogniser must cope with a sequence of unknown speakers, speaking over different channels with varying degrees of background noise, including music and sound effects.

The quality of the work done by the CUED speech group has also attracted commercial interest. In 1995, Entropic Cambridge Research Ltd (ECRL) was formed as a collaboration between Entropic Research Laboratory Inc, based in Washington DC, and Cambridge University. In 1998, ECRL merged with the parent company, and in November 1999 it was acquired by Microsoft. With Microsoft backing, Entropic is continuing to grow its operation in Cambridge and is now developing speech recognition server technology to enable direct access to websites using voice.

Although much has been achieved over the last decade, speech recognition technology is still limited and new research continues apace. Work on the core technology continues with research into new ways of building acoustic models and statistical grammars. The work on broadcast news transcription has been integrated with information retrieval algorithms to allow news stories to be retrieved from audio and video archives. New work has started on methods for building interactive dialogue systems and there is a growing emphasis on web-related applications.

| number 9, July 2000 |